Hiera: A Hierarchical Vision Transformer without the Bells-and-Whistles

Researchers from Meta have developed Hiera, a new hierarchical vision transformer that is simpler and faster than previous designs.

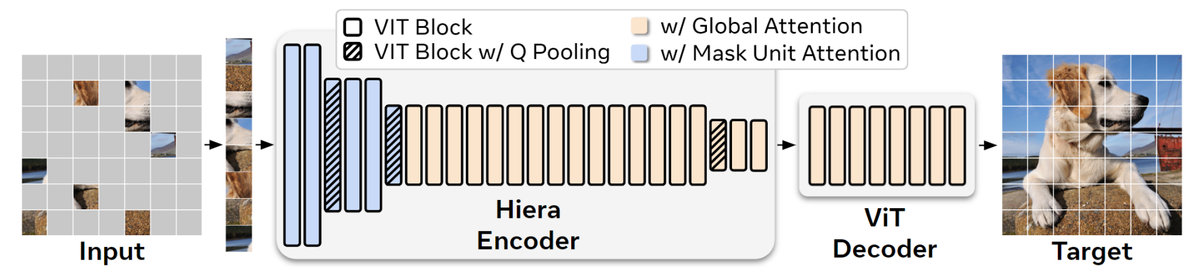

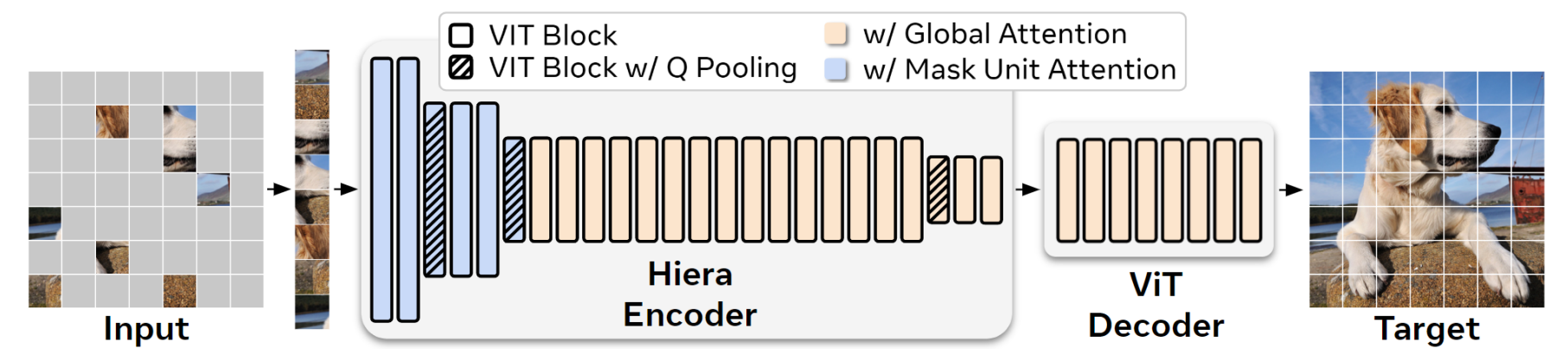

By pretraining with a strong visual pretext task, masked autoencoding (MAE), the researchers were able to strip out the vision-specific components that earlier hierarchical models relied on. The result is a transformer that is both more accurate and faster. They evaluated Hiera on a range of image and video recognition tasks.
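To make the pretext task more concrete, here is a hedged, pure-Python sketch of MAE-style random masking. It is not the code from the Hiera repository. It assumes, based on our reading of the paper, that Hiera hides coarse 32×32-pixel "mask units" rather than individual patches, and it uses illustrative numbers (a 224×224 input, stride-4 tokens, a 0.6 mask ratio) that should be checked against the official code. The function names `random_mask_units` and `expand_unit_mask` are hypothetical.

```python
import random


def random_mask_units(num_units: int, mask_ratio: float, seed: int = 0):
    """Randomly hide a fraction of mask units, MAE-style (illustrative sketch).

    Returns (keep_idx, mask): keep_idx lists the visible unit indices in order,
    and mask[i] is True when unit i is hidden from the encoder.
    """
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1)")
    num_masked = int(num_units * mask_ratio)
    rng = random.Random(seed)
    order = list(range(num_units))
    rng.shuffle(order)  # random permutation of unit indices
    masked = set(order[:num_masked])
    mask = [i in masked for i in range(num_units)]
    keep_idx = [i for i in range(num_units) if not mask[i]]
    return keep_idx, mask


def expand_unit_mask(mask, units_per_side: int, tokens_per_unit: int):
    """Broadcast a per-unit mask onto the fine token grid (hypothetical helper).

    Every token inside a hidden unit is hidden too, so the masked regions stay
    aligned when a hierarchical model later pools tokens into coarser grids.
    """
    side = units_per_side * tokens_per_unit
    grid = [[False] * side for _ in range(side)]
    for u, hidden in enumerate(mask):
        unit_row, unit_col = divmod(u, units_per_side)
        for r in range(unit_row * tokens_per_unit, (unit_row + 1) * tokens_per_unit):
            for c in range(unit_col * tokens_per_unit, (unit_col + 1) * tokens_per_unit):
                grid[r][c] = hidden
    return grid


if __name__ == "__main__":
    # Assumed setup: 224 / 32 = 7 units per side, 32 / 4 = 8 tokens per unit side.
    keep_idx, mask = random_mask_units(num_units=7 * 7, mask_ratio=0.6, seed=0)
    grid = expand_unit_mask(mask, units_per_side=7, tokens_per_unit=8)
    print(len(keep_idx), "of", len(mask), "mask units visible")  # 20 of 49
    print(sum(map(sum, grid)), "of", 56 * 56, "stride-4 tokens hidden")  # 1856 of 3136
```

Masking whole units instead of single tokens is the design choice that lets a multi-stage model use MAE. After each 2×2 pooling step, a hidden unit still maps to a whole block of hidden tokens, so visible and hidden regions never blur together.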

Paper

Hiera: A Hierarchical Vision Transformer without the Bells-and-Whistles

Modern hierarchical vision transformers have added several vision-specific components in the pursuit of supervised classification performance. While these components lead to effective accuracies and attractive FLOP counts, the added complexity actually makes these transformers slower than their vani…
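"Hierarchical" here means the network works in stages: the token grid shrinks between stages while the channel width grows, much like a CNN. The sketch below only computes those per-stage shapes. It assumes a stride-4 patch embedding, 2×2 pooling between stages, and channel doubling. The defaults (embed dim 96, stage depths 2-3-16-3) are what we recall for the Hiera-B configuration and should be verified against the repository. `Stage` and `stage_shapes` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    index: int     # 1-based stage number
    grid: int      # tokens per side of the (square) token grid
    channels: int  # embedding width in this stage
    depth: int     # number of transformer blocks in this stage


def stage_shapes(image_size=224, patch_stride=4, embed_dim=96,
                 depths=(2, 3, 16, 3), pool=2, dim_mult=2):
    """Compute token-grid size and width per stage of a generic hierarchical ViT."""
    stages = []
    grid, channels = image_size // patch_stride, embed_dim
    for i, depth in enumerate(depths):
        if i > 0:
            # Between stages: pool x pool spatial pooling shrinks the grid,
            # and the channel width grows by dim_mult.
            if grid % pool:
                raise ValueError("token grid is not divisible by the pooling factor")
            grid //= pool
            channels *= dim_mult
        stages.append(Stage(i + 1, grid, channels, depth))
    return stages


if __name__ == "__main__":
    for s in stage_shapes():
        print(f"stage {s.index}: {s.grid}x{s.grid} tokens, "
              f"{s.channels} channels, {s.depth} blocks")
    # stage 1: 56x56 tokens, 96 channels, 2 blocks
    # stage 2: 28x28 tokens, 192 channels, 3 blocks
    # stage 3: 14x14 tokens, 384 channels, 16 blocks
    # stage 4: 7x7 tokens, 768 channels, 3 blocks
```

The point of the layout is that early, high-resolution stages stay cheap because they are narrow and shallow. Most of the compute goes to the coarse third stage.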

Source Code

GitHub - facebookresearch/hiera: Hiera: A fast, powerful, and simple hierarchical vision transformer.
