The Technology Powering Apple's Vision Pro Strategy

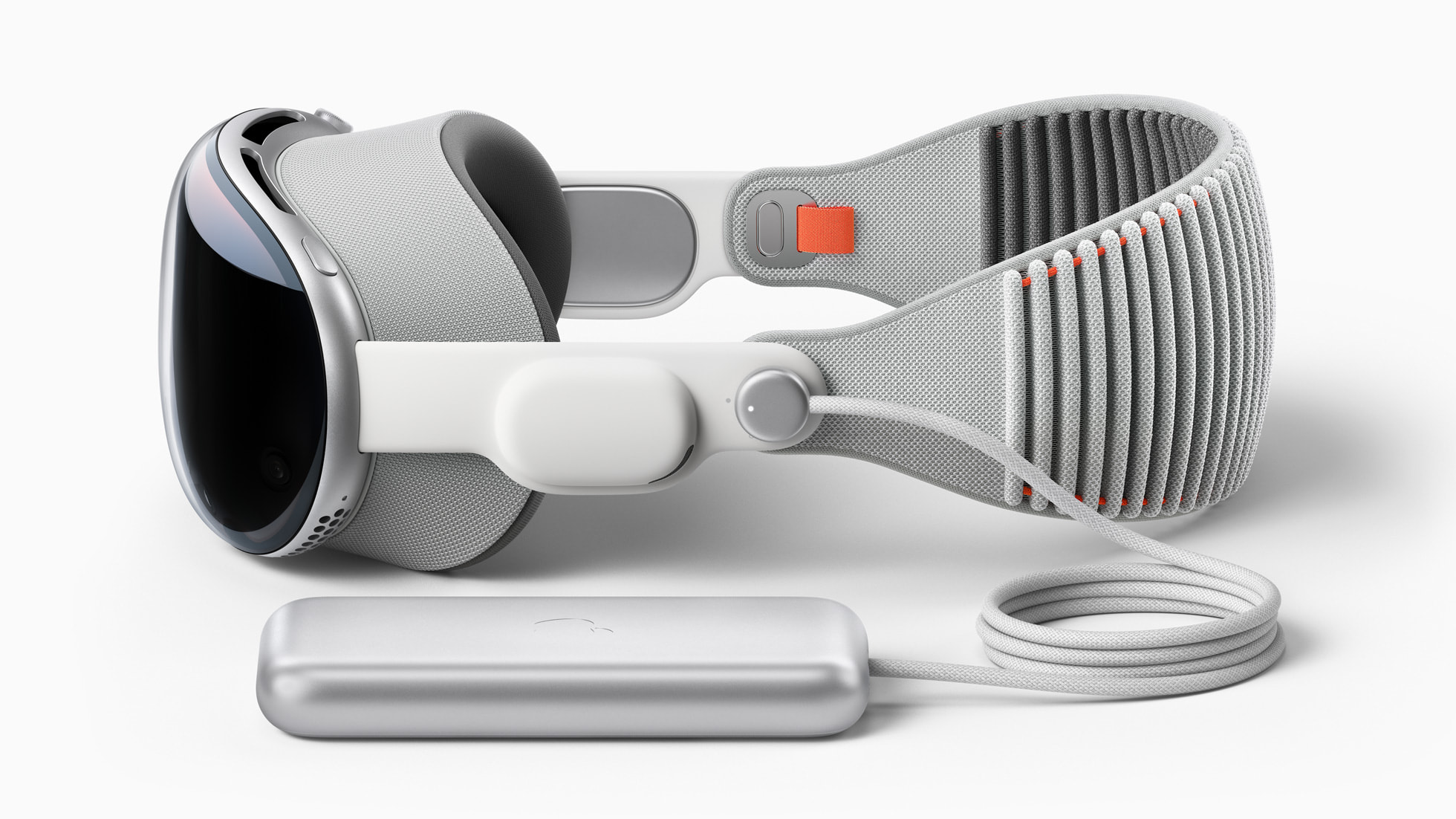

At WWDC 2023, Apple showcased its VR headset, Apple Vision Pro, an impressive combination of cutting-edge hardware and software.

During the Worldwide Developers Conference (WWDC) in 2023, Apple took the wraps off its latest innovation - the Apple Vision Pro.

I had high expectations for this product, since it was built by none other than Apple, a company widely regarded as the best hardware maker in the world. I am also among those who are relatively optimistic about VR. To my surprise, Apple not only met but exceeded my expectations on both counts: the hardware and the accompanying experience were better than anything I thought possible.

This article delves into the technology behind a new device that Apple claims will usher in a major shift in computing platforms, something Meta has been trying to achieve for many years without success. Details about the device are still scarce, but by gathering information from various sources online, we can piece together a more complete picture of its hardware and software components.

The Technology

The hardware seems almost unbelievable. Imagine a mobile device with computing power equivalent to Apple's mid-tier laptops, coupled with a remarkable sensor and camera array that has its own dedicated co-processor. On top of that, it has high-resolution displays and top-notch optics, all packed into a compact, mobile form factor.

Display and Optics

One major component of the experience is the display. The display system uses micro-OLED panels, which let Apple fit 64 pixels into the space of a single iPhone pixel. Each pixel is 7.5 microns wide, and there are 23 million pixels across two panels, each about the size of a postage stamp.
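
To put the pixel figures in more familiar units, here is a quick back-of-the-envelope calculation. It assumes that the quoted 7.5-micron width is the full pixel pitch (no gap between neighboring pixels) and that the 23 million pixels are split evenly between the two panels; both are simplifying assumptions rather than published specifications.

$$
\text{ppi} \approx \frac{25.4\ \text{mm}}{7.5\ \mu\text{m}} = \frac{25\,400\ \mu\text{m}}{7.5\ \mu\text{m}} \approx 3\,387,
\qquad
\frac{23 \times 10^{6}\ \text{pixels}}{2\ \text{panels}} = 11.5 \times 10^{6}\ \text{pixels per panel}
$$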

For the optics, Apple partnered with Zeiss on optical inserts that attach magnetically to the lenses and provide vision correction. A three-element lens then magnifies the displays so they span a wider field of view while preserving sharpness and color.

The goal of this impressive hardware line-up is to overcome two limitations that many previous headsets suffered from: a narrow field of view and visibly pixelated imagery.

System-on-a-Chip (SoC)

The Vision Pro pairs two complementary chips: an M2, the chip found in Apple's current mid-tier laptops, and the R1, a new chip dedicated to processing sensor data in real time with extremely low latency.

The R1 chip takes input from all the sensors embedded in the headset to deliver precise head and hand tracking, along with real-time 3D mapping and eye tracking. It is a real-time processor that deterministically processes sensor data within 12 milliseconds (eight times faster than the blink of an eye).

According to Apple, the brain can detect even a slight delay between what you see and what your body expects to see, and that lag leads to issues like motion sickness in VR. The Vision Pro's visual pipeline, however, displays images to your eyes in 12 milliseconds or less, which is quite impressive. Consider that the image sensor alone takes around 7-8 milliseconds to capture and process what it sees, which leaves the Vision Pro only about 4 milliseconds to finish processing the image and display it in front of your eyes.
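
The latency figures above form a simple budget: stage times add up along a serial pipeline and must stay within the 12 ms target. The sketch below treats the pipeline as two strictly sequential stages using the quoted numbers (about 7-8 ms for the sensor, about 4 ms for the rest). The stage names and the two-stage split are illustrative assumptions, not Apple's documented pipeline.

```python
from typing import Mapping

# End-to-end target quoted for the Vision Pro's passthrough pipeline.
BUDGET_MS = 12.0

def total_latency(stages: Mapping[str, float]) -> float:
    """Sum per-stage latencies (ms) of a strictly serial pipeline."""
    return sum(stages.values())

def headroom_ms(stages: Mapping[str, float], budget_ms: float = BUDGET_MS) -> float:
    """Milliseconds left in the budget (negative if the budget is blown)."""
    return budget_ms - total_latency(stages)

def within_budget(stages: Mapping[str, float], budget_ms: float = BUDGET_MS) -> bool:
    """True if the serial pipeline finishes within the latency budget."""
    return headroom_ms(stages, budget_ms) >= 0.0

# Illustrative two-stage split; the stage names are assumptions, not Apple's terms.
pipeline = {
    "sensor_capture": 8.0,        # upper end of the quoted 7-8 ms
    "process_and_display": 4.0,   # the quoted ~4 ms for the rest of the path
}

print(f"total: {total_latency(pipeline):.1f} ms, "
      f"within budget: {within_budget(pipeline)}")
# prints "total: 12.0 ms, within budget: True"
```

At the upper end of the sensor estimate, the pipeline uses the entire budget with no headroom, which shows how little slack the remaining 4 ms leaves.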

Cameras & Sensors

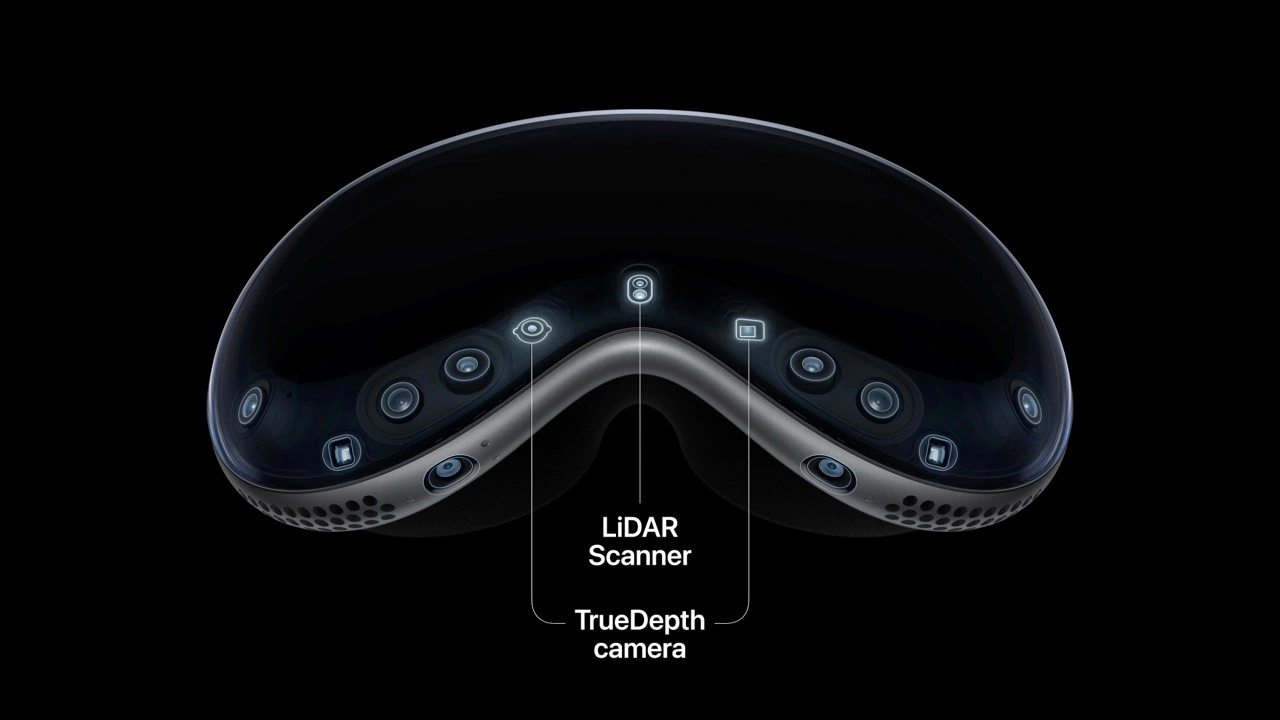

"It processes input from 12 cameras, five sensors and six microphones," said Mike Rockwell, head of Apple's Technology Development Group.

On the outside, two cameras point at the real world and two point downward to track your hands. The lidar scanner uses lasers to measure distances and build 3D maps of the environment. Meanwhile, the TrueDepth camera combines infrared light and machine learning to capture accurate depth information; on the iPhone, the same technology powers Face ID and animated emojis (Animoji).

Inside the device, two IR cameras and a ring of LEDs track your eyes. Apple combines this eye tracking with an outward-facing 3D display to show your eyes on the outside of the headset, which makes the device look somewhat transparent. Apple calls this feature EyeSight.

Controllers

The surprising thing about the Vision Pro's controllers is that there are none. At least, not in the sense of the physical controllers that ship with, for example, Meta's Quest product line.

The input side of visionOS is particularly fascinating to me. The primary method of interaction remains pointing. In earlier systems like the Mac, pointing was indirect: you moved a cursor with a mouse or trackpad and clicked. On the iPhone and subsequent devices, pointing became direct through touch, at the cost of some precision and the loss of "hover" interactions. On the Vision Pro and its successors, you point by looking at something and then "click" by tapping your bare fingers together, with your hands resting in your lap.

This departs from earlier hand-tracking systems in that it reduces the hands to sources of discrete gestures (actuate, flick) rather than continuous pointing. The hands become the buttons and scroll wheel of the mouse, while the eyes take over the role of the cursor.
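
The division of labor described above, with gaze as the pointer and the hand supplying only discrete events, can be modeled as a tiny dispatcher. This is a hypothetical sketch of the interaction model, not visionOS's API: `Gesture`, `Target`, and `dispatch` are invented names, and real visionOS apps receive input through SwiftUI gestures.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional

class Gesture(Enum):
    """Discrete hand events; hand position is not used for pointing."""
    PINCH = auto()  # "actuate": plays the role of a mouse click
    FLICK = auto()  # plays the role of a scroll-wheel step

@dataclass
class Target:
    """A UI element that can sit under the user's gaze (hypothetical)."""
    name: str

def dispatch(gaze_target: Optional[Target], gesture: Gesture) -> str:
    """Combine the gazed-at element (the 'pointer') with a discrete gesture."""
    if gaze_target is None:
        return "ignored: nothing under gaze"
    if gesture is Gesture.PINCH:
        return f"activate {gaze_target.name}"
    return f"scroll {gaze_target.name}"

print(dispatch(Target("Open button"), Gesture.PINCH))  # activate Open button
print(dispatch(None, Gesture.FLICK))                   # ignored: nothing under gaze
```

Note that the hand never has to reach the target: the same pinch does different things depending only on where the user is looking.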

Apple envisions speech as the preferred method for quick text input, with a Bluetooth keyboard for longer input. It also plans to provide a virtual keyboard that you operate with your fingertips. However, in my past experience, similar virtual keyboards have been consistently problematic: without tactile feedback, typing demands constant visual attention, staying accurate is laborious, and fatigue sets in quickly. I would be genuinely intrigued if Apple has managed to overcome these challenges, although it would come as a surprise.

visionOS

visionOS is the name of Apple’s newest operating system: the OS that powers the Apple Vision Pro, Apple’s soon-to-launch augmented reality headset.

Under the hood, visionOS includes a real-time subsystem. A real-time operating system is designed to respond to events and tasks deterministically and predictably, within strict timing constraints. This matters because it keeps latency in the vision system deterministically low, regardless of whatever other computing load is on the system.

The user experience itself runs on a time-sharing operating system based on iOS, executing on the M2 chip. Alongside it, a dedicated real-time subsystem powered by the R1 chip ensures that the outside world is continuously rendered within a remarkable 12 milliseconds, even if the main visionOS environment runs into problems.
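
The split between a time-sharing side and a real-time side can be illustrated with a toy compositor that runs on a fixed schedule and never waits for the app/UI side. This is a hypothetical sketch of the decoupling idea (the `Compositor` and `Frame` names are made up), not Apple's implementation: each tick pairs a fresh passthrough frame with whatever UI frame was most recently submitted, so a stalled app leaves stale UI on screen while the view of the outside world keeps updating.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Frame:
    passthrough_id: int          # index of the camera frame (always fresh)
    app_content: Optional[str]   # most recent UI frame from the time-sharing side

class Compositor:
    """Toy real-time compositor: emits a frame every tick and never blocks
    waiting for the time-sharing (app/UI) side."""

    def __init__(self) -> None:
        self.last_app_frame: Optional[str] = None

    def submit_app_frame(self, content: str) -> None:
        # Called by the time-sharing side whenever it finishes rendering UI.
        self.last_app_frame = content

    def tick(self, passthrough_id: int) -> Frame:
        # Called on a fixed schedule. Pairs the new camera frame with whatever
        # UI frame is newest: possibly stale, or None if none has arrived yet.
        return Frame(passthrough_id, self.last_app_frame)

comp = Compositor()
comp.submit_app_frame("ui v1")
# Simulate the time-sharing side stalling after its first frame:
frames: List[Frame] = [comp.tick(i) for i in range(3)]
for f in frames:
    print(f.passthrough_id, f.app_content)
```

The key design choice is that `tick` reads shared state instead of waiting on the UI side, so its cost does not depend on how busy or stuck the time-sharing side is.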

Conclusion

Apple has revealed an impressive piece of hardware; whether it actually leads to the paradigm shift Apple hopes for remains to be seen. Priced at $3,499, this first generation will see limited distribution. Apple expects to sell around 900,000 units next year.

The other large player in the VR hardware space is, of course, Meta. Given what we have seen from Apple today, the unfortunate reality for Meta is that it seems completely outclassed on the hardware front. Yes, Apple is working with a 7x price advantage (its headset costs roughly seven times as much), which certainly contributes to things like superior resolution. But the deep integration between Apple’s own silicon and its custom-made operating system is going to be very difficult to replicate for a company that has (correctly) committed to an Android-based OS and a Qualcomm-designed chip.